Explanation-Based Attention for Semi-Supervised Deep Active Learning

Published in LLD @ ICLR, 2019

Abstract

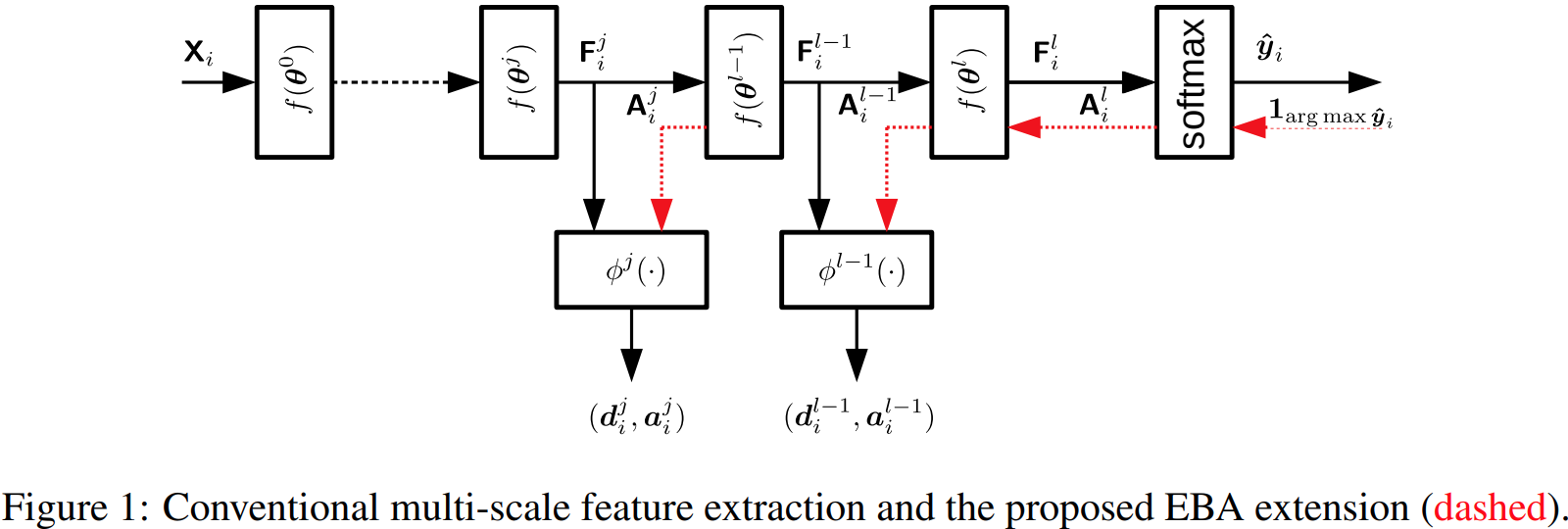

We introduce an attention mechanism that improves feature extraction for deep active learning (AL) in the semi-supervised setting. The mechanism builds on recent methods for visually explaining predictions made by deep neural networks (DNNs). We apply this explanation-based attention to MNIST and SVHN classification. With the same number of training examples, our experiments show accuracy improvements on both the original and class-imbalanced datasets, as well as faster long-tail convergence than uncertainty-based methods.
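For readers unfamiliar with the uncertainty-based methods used as the comparison point, the following is a minimal sketch of one common member of that family, maximum-entropy acquisition. It is not the explanation-based attention method of the paper, and the abstract does not say which uncertainty-based baselines were used. The function names `predictive_entropy` and `select_most_uncertain`, and the toy probabilities, are illustrative assumptions.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    # Terms with zero probability contribute nothing, so they are skipped.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_most_uncertain(pool_probs, k):
    """Return indices of the k unlabeled examples with the highest entropy.

    pool_probs: list of per-example class-probability lists, assumed to be
    softmax outputs of the current model on the unlabeled pool.
    """
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: predictive_entropy(pool_probs[i]),
        reverse=True,
    )
    return ranked[:k]


# Toy pool of four examples over three classes (made-up values).
pool_probs = [
    [0.98, 0.01, 0.01],  # confident prediction, low entropy
    [0.34, 0.33, 0.33],  # near-uniform prediction, highest entropy
    [0.70, 0.20, 0.10],
    [0.50, 0.50, 0.00],
]
selected = select_most_uncertain(pool_probs, k=2)
print(selected)
```

In an active learning loop, the selected indices would be sent for labeling and the model retrained on the enlarged labeled set before the next selection round.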

Bibtex

    @inproceedings{eba,
      author    = {Denis Gudovskiy and Alec Hodgkinson and Takuya Yamaguchi and Sotaro Tsukizawa},
      title     = {Explanation-Based Attention for Semi-Supervised Deep Active Learning},
      booktitle = {Proceedings of the LLD workshop at International Conference on Learning Representations (ICLR)},
      year      = {2019}
    }