ShiftCNN: Generalized Low-Precision Architecture for Inference of Convolutional Neural Networks

Published in arXiv, 2017

Abstract

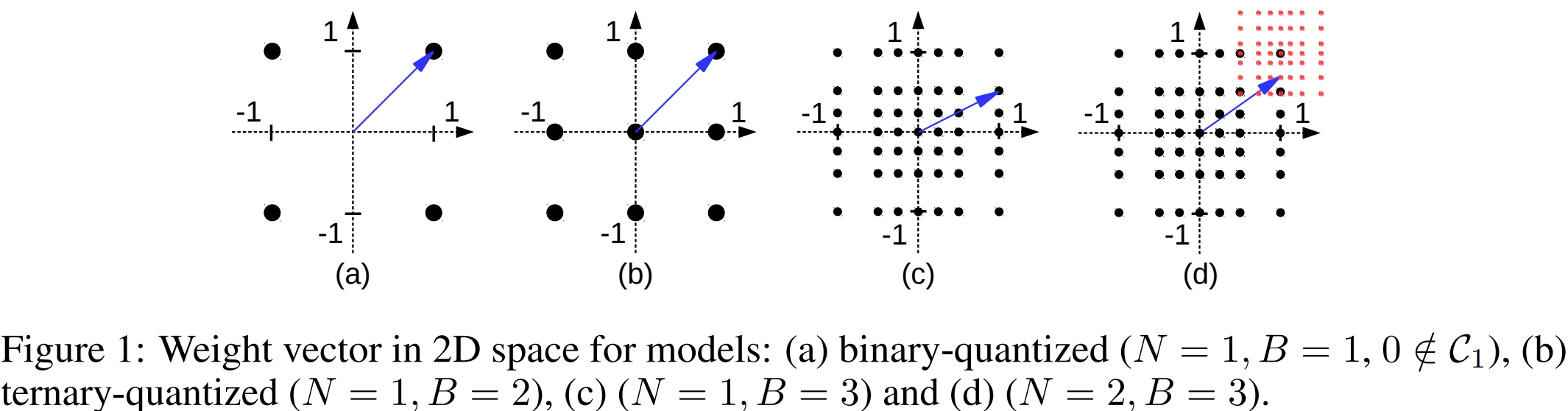

In this paper we introduce ShiftCNN, a generalized low-precision architecture for inference of multiplierless convolutional neural networks (CNNs). ShiftCNN is based on a power-of-two weight representation and, as a result, performs only shift and addition operations. Furthermore, ShiftCNN substantially reduces the computational cost of convolutional layers by precomputing convolution terms. This optimization can be applied to any CNN architecture with a relatively small codebook of weights, and it reduces the number of product operations by at least two orders of magnitude. The proposed architecture targets custom inference accelerators and can be realized on FPGAs or ASICs. Extensive evaluation on ImageNet shows that state-of-the-art CNNs can be converted into ShiftCNN without retraining, with less than a 1% drop in accuracy, when the proposed quantization algorithm is employed. RTL simulations targeting modern FPGAs show that the power consumption of convolutional layers is reduced by a factor of 4 compared to conventional 8-bit fixed-point architectures.
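The two ideas in the abstract, power-of-two weights and precomputed convolution terms, can be illustrated with a minimal Python sketch. This is not the paper's quantization algorithm or hardware design: the exponent range (`MIN_EXP`, `MAX_EXP`), the single-term rounding rule, and all function names are assumptions chosen for illustration. Activations are assumed to be integers, and each weight is rounded to one signed power of two so that a product becomes a bit shift.

```python
import math

# Hypothetical codebook: weight magnitudes 2**-7 ... 2**0 (an assumption, not from the paper).
MIN_EXP, MAX_EXP = -7, 0


def quantize_pow2(w):
    """Round w to the nearest signed power of two in the log domain.

    Returns (sign, exponent); sign 0 encodes a zero weight.
    """
    if w == 0.0:
        return (0, 0)
    exp = round(math.log2(abs(w)))
    if exp < MIN_EXP:
        return (0, 0)  # too small to represent, treated as zero
    return (1 if w > 0 else -1, min(exp, MAX_EXP))


def precompute_shifts(x):
    """All products of integer input x with the codebook magnitudes.

    Values are scaled by 2**-MIN_EXP so that every shift is a left shift.
    """
    return {e: x << (e - MIN_EXP) for e in range(MIN_EXP, MAX_EXP + 1)}


def multi_filter_dot(inputs, filters):
    """Dot products of one input vector with several quantized filters.

    The shift tables are built once per input and reused by every filter,
    so the per-filter work is only table lookups and additions.
    """
    tables = [precompute_shifts(x) for x in inputs]
    outputs = []
    for qweights in filters:
        acc = 0
        for table, (sign, exp) in zip(tables, qweights):
            if sign > 0:
                acc += table[exp]
            elif sign < 0:
                acc -= table[exp]
        outputs.append(acc / (1 << -MIN_EXP))  # undo the fixed-point scaling
    return outputs


inputs = [4, 8, 16]
filters = [
    [quantize_pow2(w) for w in (0.5, -0.25, 0.12)],
    [quantize_pow2(w) for w in (1.0, 0.001, -0.5)],
]
print(multi_filter_dot(inputs, filters))
```

In this sketch the number of shift operations depends on the number of inputs and the codebook size, not on the number of filters, which is the intuition behind sharing precomputed terms across a convolutional layer.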

Bibtex

@article{ShiftCNN,
  author = {Denis Gudovskiy and Luca Rigazio},
  title = {Shift{CNN}: Generalized Low-Precision Architecture for Inference of Convolutional Neural Networks},
  journal = {arXiv:1706.02393},
  year = {2017}
}